From Reactive to Proactive

Artificial intelligence (AI) encompasses a broad set of tools developed to perform tasks that have historically required human intelligence. The new generative AI tools, such as ChatGPT, are not programmed with a specific set of instructions; rather, they are trained on large sets of data, and their algorithms guide how they respond to prompts. We increasingly use a range of AI tools (such as autocomplete suggestions, navigation systems, facial recognition on phones, and ChatGPT) in many aspects of our lives. Because of the prevalence and power of these tools, their rapid development, and their potential to be truly disruptive in both positive and negative ways, it is critical that school districts develop policies, guidelines, and supports for the productive use of AI in schools. Later in this commentary, we discuss many of the short-term positives and negatives of using AI in schools. The greatest impact of AI, however, may lie in how it can transform teachers’ roles and student learning.

AI tools are already prevalent in schools, and there is no way to remove them. A survey by Impact Research in July 2023 found that 63 percent of teachers had used ChatGPT, currently the most prominent generative AI tool, in their work, and that 84 percent of those teachers found it beneficial. The same survey found that 49 percent of parents support the use of ChatGPT in school and that 42 percent of students report having used it in school. District leaders must assert their agency in engaging with AI so that they can steer how it is used rather than find themselves constantly “putting out fires” caused by misuse and its negative consequences.

These data show that AI has arrived in education, yet at the organizational level, districts currently lag behind their staff, students, and communities in responding to this reality. If this does not change, the result will essentially be a “Wild West” in which those with resources are likely to make large gains and others will be left behind, which could widen the nation’s digital divide even further. Districts need to figure out both how to leverage AI for their own uses and how to prepare students for an AI-infused world; otherwise, their students will not be ready for a future that is rapidly arriving. To do this, districts need to take a more proactive role in navigating education in this new era of AI.

The central problem facing California districts that want to make the best use of AI is the many competing demands on their resources, especially time. Key issues include the lingering effects of the COVID-19 pandemic on students, the new mathematics framework, ongoing work on initiatives like Universal Transitional Kindergarten and community schools, and, for many, looming crises around chronic absenteeism and the financial implications of declining enrollment. Although AI is important, most districts do not currently have the capacity to launch a major initiative around it.

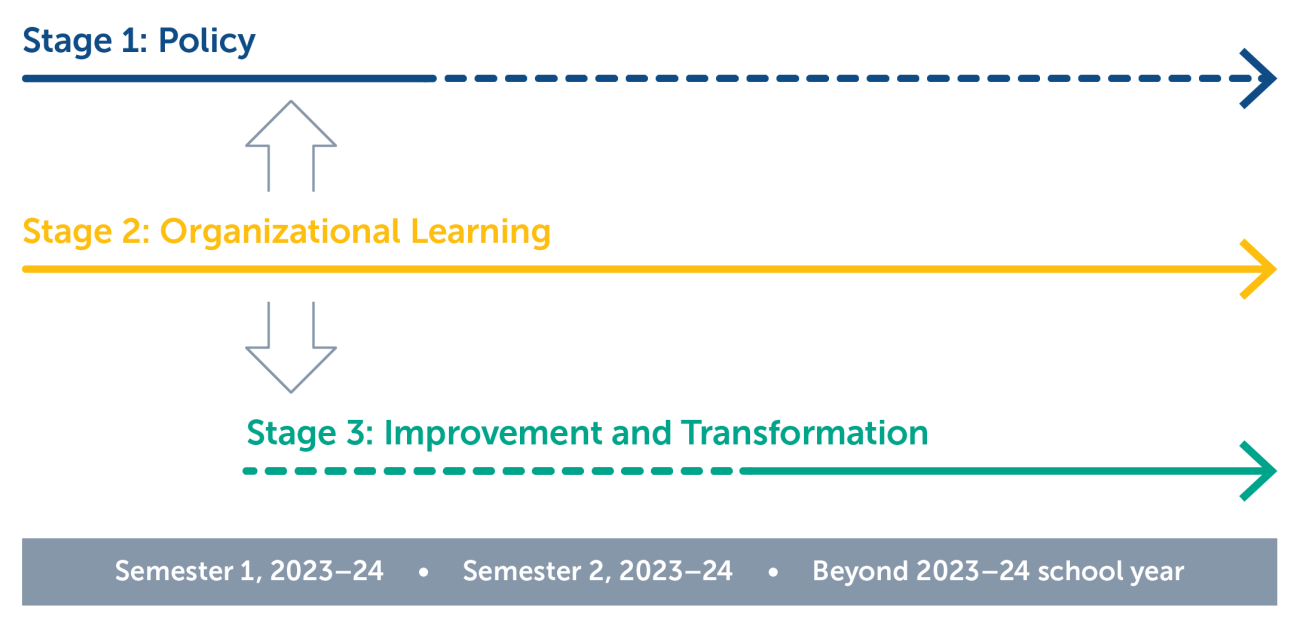

In July, we released a commentary that broadly advocated for district action around AI. In response to questions we received about what to consider and which resources might be helpful, we build on that earlier piece here by recommending a general approach for district engagement with AI during 2023–24 and beyond. We recommend that districts approach their work on AI in three overlapping stages:

- Stage 1: Create policy to address the immediate risks so that AI does not undermine learning during the coming year.

- Stage 2: Facilitate organizational learning by making a small but strategic investment in harnessing the individual learning of the many educators already excited about AI.

- Stage 3: Promote improvement and transformation by making thoughtful decisions about how to spread AI equitably, once there is sufficient knowledge in the district to use resources wisely and balance risks and rewards.

Figure 1. Three Overlapping Stages of District Engagement Around AI

This staged approach recognizes the many challenges schools face as they address their core mission of educating students, but it also acknowledges that district inaction could lead to serious consequences as well as long-term opportunity costs, both of which outweigh what districts would invest in this staged approach.

Creating Policy Around AI

This fall, districts need to focus on addressing the immediate risks of AI. The greatest early risks are loss of academic integrity, violations of privacy, and unethical results of AI use. The key work of this first stage is twofold: (a) develop policies and guidelines around AI use and (b) provide training to ensure that staff have a basic understanding of AI and the district’s policies and guidelines. Districts’ AI policies and guidelines should include the following:

- Remove any broad bans on AI use or the use of specific tools (e.g., ChatGPT). One reason to remove bans is that attempting enforcement is a losing battle that may lead to bias in who gets punished for supposed cheating. Although some tools claim to be able to detect AI use, detection currently generates false positives and false negatives, and is likely to continue doing so for the foreseeable future, which creates a risk of harm to students. AI has the potential to support educators and students alike in many ways, and policies and guidelines should enable educators to use AI safely and experiment with it in their classrooms.

- Create guidelines to preserve academic integrity. The guidelines should consider that students potentially have access to AI any time they are on a device or the internet unless they are being closely monitored. As a result, AI may affect how students approach learning, and its use should be considered in how students’ learning is assessed. Guidelines should help teachers understand how students could “cheat” with AI and how to adapt their classrooms to reduce these risks.

- Create a policy around privacy and AI use that is compliant with the Family Educational Rights and Privacy Act (FERPA) and the Children’s Online Privacy Protection Act (COPPA). Once an AI program has been trained on data, it generally cannot “forget” what it learned from those data. Companies with AI products may require users to allow any data they submit to be used for the ongoing training of any tool (which could also allow the data to be sold to other entities), effectively making those data public. Therefore, districts should minimize the personal data collected for AI applications and anonymize or deidentify personal data whenever possible. To comply with FERPA, the policy should prohibit AI programs from accessing confidential or personally identifiable data without explicit written permission from parents or eligible students. COPPA compliance will require different approaches for students younger than 13.

- Be aware of potential biases that arise from the materials used to train AI. All work produced by AI should be transparently identified as such, and people should be empowered to monitor it and be required to actively concur with or override AI outputs.

It will take districts a few months to adopt policies and create the regulations and processes to support their enactment (Stage 1), so it is critical that even before policies are set, districts provide professional development for educators and staff. This professional development should include basic information for all teachers on what AI is, how to preserve academic integrity, and how teachers, administrators, and staff can individually start exploring the use of AI in their work and classrooms. Through a concerted effort to support organizational learning (Stage 2), policy efforts should shift over time from mitigating risk to ensuring equitable improvements from AI use.

Turning Early Adopters’ Learning Into Organizational Learning

As soon as possible—even before finalizing their policies—districts need to enter a second stage focused on supporting and consolidating learning about AI that will facilitate the development of good policies as well as long-term improvement. We have already established that individual teachers and staff are exploring AI on their own. But AI tools are developing rapidly, and since district administrators may have little time to dedicate solely to AI, they need a way to harness the individual exploration of AI to support organizational learning. If districts coordinate learning successfully, this can support policy development, teachers’ learning across the board, and, ultimately, organizational improvement.

Districts can rapidly build system capacity for AI by using collaborative structures that help early adopters innovate and share what they learn, so that their exploration builds knowledge in the organization and informs future decisions about AI. The central district role in this stage is to appoint a person or team to lead AI work and task them with creating new structures and processes, or reinvigorating existing ones, to support teachers’ collaborative learning and the district’s consolidation of learning about AI. Professional learning communities and similar structures are well suited to nurturing innovators and testing new ideas for the district.

Districts could coordinate learning on a range of issues around changes in instruction, strategies for teaching students how to use AI, and new ways of doing their own work. For example, districts could learn from teachers’ exploration of the following activities:

- Adapt assignments, assessments, and grading, which could include features like a scaffolded set of tasks; connections to personal and/or recent content for longer out-of-class assignments; in-class presentations to demonstrate content learned, regardless of whether or how AI supported that learning; appropriate citations of AI (like any other source) showing what was used and how; and some skill assessments designed to remove the possibility of AI support.

- Require students to use AI for some assignments with the goal of showing students how to use AI to support their learning while teaching them about ethical use of AI.

- Explore uses of AI, including small-scale pilots of specific tools, to differentiate instruction based on students’ unique interests and needs (e.g., Individualized Education Program goals, supports for English language learners, more challenging assignments for students who are high performing in a domain, and reteaching and AI tutoring).

- Use AI to support teachers’ work, including lesson planning, giving feedback on students’ work, and reteaching concepts.

The district staff or team responsible for leading AI work should support early adopters by connecting them to resources and tools being developed by leaders in the field, distributing pilot projects among interested teachers, and consolidating what is learned from those efforts.

The district lead or team should also establish communication structures so that they can learn from the experience of early adopters as well as those who are more hesitant. Key voices to listen to include teachers, administrators, community members, and students. Based on learning from a diverse set of stakeholders, districts should have two main products from this stage, in addition to structures and processes that can support ongoing learning: (a) guidelines for use of AI in instruction that include how families and caregivers should support AI use outside the classroom, and (b) a policy around broader adoption of specific AI tools. Department and grade-level meetings, staff meetings, and events that connect families to the school are existing structures that can be used to communicate broadly about what innovators are learning and to explain and receive feedback on emergent guidelines and district policies around AI.

Using Learning to Guide Improvement and Transformation

Historically, many innovations in education stop at the second stage (with policies, guidelines, and training) and produce relatively little substantial change to the status quo of teaching and learning. Because AI’s transformative power is so great and districts will be unable to prevent its influence on education, there are huge risks if districts do not move into Stage 3, which should focus on how to spread AI to improve student outcomes while attending to equity and the potential human costs. It is important that all districts enter this stage, in which they decide how AI should be used consistently across their systems to ensure that (a) students are prepared for the future and (b) teachers, administrators, and staff integrate ethical use of AI into their roles and ways of working. Unlike the previous stages, Stage 3 can be entered more gradually, with leaders using the results of learning in Stage 2 as a bridge to the broader transformation that districts must realize to serve their students well in the long run.

The main risks of this stage are inequity, wasted resources, and harm to individuals and communities from ill-considered disruptions. Districts have the power and responsibility to ensure that all students’ learning is appropriately supported by AI and that those learning opportunities prepare them for the careers of the future. To meet this equity imperative, districts will have to reconsider current expectations for what students need to know and be able to do, and why those expectations are important. Eventually, we expect that states will modify the standards and frameworks that set expectations for student learning. Some types of knowledge and skills will no longer be needed, at least not in the same way. To use the calculator analogy, however, students will still need to know how to add, because basic arithmetic is a conceptually important step in understanding mathematics. Districts should examine their course offerings in terms of how they employ technology overall, how their courses prepare students for careers in using and developing technology, and how AI specifically should be used within each class, whether or not that class is itself a technology class.

Districts also need to improve the return on investment that they have traditionally received for educational technology. The uneven performance of educational technology despite massive past investments is why it is so critical that, in Stage 2, districts consolidate learning about the potential of specific tools and their uses, as well as about how to support educators in using those tools consistently and effectively. Piloting before broad adoption and recognizing that no tool is a silver bullet, and that any tool can fail if used poorly, are key principles in addressing this risk.

Finally, as we look hopefully to AI as a tool for improving education, we must attend to the fact that rapid change is hard and that, historically, transformative moments in technology, from the Industrial Revolution to the advent of the internet, have come at personal cost to some workers. Early in the exploration of bringing AI into districts, labor and management need to work together to create a shared vision of how AI should be used. As in the other areas discussed above, we believe that inaction in this domain is not a viable option, because teachers are already using AI to do their work differently.

Fortunately, an existing and growing set of resources supports districts with this challenging and important work. To help guide national and local education systems in developing policies and translating policy into classroom practice, Code.org, ETS, the International Society for Technology in Education (ISTE), Khan Academy, and the World Economic Forum have launched a global initiative called TeachAI. This initiative has brought together a large coalition of international and state agencies (including the California Department of Education), education organizations (e.g., the National School Boards Association, Digital Promise, and the American Indian Science and Engineering Society), and technology organizations (e.g., Amazon, Meta, Microsoft, and OpenAI) to provide recommendations and resources that guide education leaders through each of the three stages described in this article. Although comprehensive tools like an AI policy toolkit, curriculum and assessment exemplars, and an AI literacy framework are currently being developed, these organizations already have resources available to meet the urgent needs of school leaders.

The rapid development of AI tools presents both opportunities and challenges for school districts. A staged approach can help districts responsibly integrate AI in a manner that avoids unnecessary risks while working towards transformative improvements. By creating foundational policies, leveraging innovators, and eventually reenvisioning roles, curriculum, pedagogy, and resource allocation, districts can harness AI’s potential to augment human capacities and create more effective and equitable learning environments. Collaboration and continued learning will be key. While uncertainty remains, maintaining a human-centered focus can help ensure that AI elevates the human connections at the heart of education.

Additional Resources

Although we have not conducted a comprehensive review of all possible resources (there are too many), the following are some resources and organizations we encountered in our work that could support district efforts:

- Because few K–12 districts have policies and resources of their own, we examined some from higher education. Duke University (as well as many other universities) has created a good resource to support its faculty and staff in thinking about the use of AI that clearly explains key concepts and provides links (many of which have more links) to other resources. Much of the content can be applied to TK–12 settings and used as a source for information and suggestions for policy and practice.

- A report from the U.S. Department of Education’s Office of Educational Technology gives a thoughtful overview of the main issues around using AI in schools.

- ISTE has released a variety of resources and is even working on a chatbot designed for educators.

- Khan Academy is piloting Khanmigo, an AI tutor and assistant. Other tools will join it in this market space (we are not endorsing this particular tool), but Khanmigo is a good place to start investigating the potential for AI to support differentiation, because good information is available about how these types of tools work.

- ETS has a dedicated AI Lab researching ways to enhance assessments.

- Code.org is spearheading a professional learning series to prepare educators for the upcoming year.

- TeachAI is developing a broad toolkit designed to support districts in this journey and anticipates releasing a series of resources, including an AI policy toolkit with sample policies and an implementation roadmap, curriculum and assessment exemplars, and an AI literacy framework, starting this fall.

- A California School Boards Association blog post from June 2022 provides additional links.

Gallagher, H. A., Yongpradit, P., & Kleiman, G. (2023, August). From reactive to proactive: Putting districts in the AI driver's seat [Commentary]. Policy Analysis for California Education. https://edpolicyinca.org/newsroom/reactive-proactive